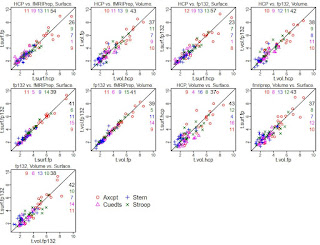

This post is the second in a series, which begins with this post. In that first post I included examples of the estimated HDR curves for a positive control analysis, one of which is copied here for reference.

What are the axis labels for the estimated HDR curves? How did we generate them? What made us think about changing them?

At the first level the (curve) axis label question has a short answer: y-axes are average (across subjects) beta coefficient estimates, x-axes are knots. But this answer leads to another question: how do knots translate into time? Answering this question is more involved, because we can't translate knots to time without knowing both the TR and the afni 3dDeconvolve command. The TR is easy: 1.2 seconds. Listing the relevant afni commands is also easy:

3dDeconvolve \

-local_times \

-x1D_stop \

-GOFORIT 5 \

-input 'lpi_scale_blur4_tfMRI_AxcptBas1_AP.nii.gz lpi_scale_blur4_tfMRI_AxcptBas2_PA.nii.gz' \

-polort A \

-float \

-censor movregs_FD_mask.txt \

-num_stimts 3 \

-stim_times_AM1 1 f1031ax_Axcpt_baseline_block.txt 'dmBLOCK(1)' -stim_label 1 ON_BLOCKS \

-stim_times 2 f1031ax_Axcpt_baseline_blockONandOFF.txt 'TENTzero(0,16.8,8)' -stim_label 2 ON_blockONandOFF \

-stim_times 3 f1031ax_Axcpt_baseline_allTrials.txt 'TENTzero(0,21.6,10)' -stim_label 3 ON_TRIALS \

-ortvec motion_demean_baseline.1D movregs \

-x1D X.xmat.1D \

-xjpeg X.jpg \

-nobucket

3dREMLfit \

-matrix X.xmat.1D \

-GOFORIT 5 \

-input 'lpi_scale_blur4_tfMRI_AxcptBas1_AP.nii.gz lpi_scale_blur4_tfMRI_AxcptBas2_PA.nii.gz' \

-Rvar stats_var_f1031ax_REML.nii.gz \

-Rbuck STATS_f1031ax_REML.nii.gz \

-fout \

-tout \

-nobout \

-verb

Unpacking these commands is not so easy, but I will try to do so here for the most relevant parts; please comment if you spot anything not quite correct in my explanation.

First, the above commands are for the Axcpt task, baseline session, subject f1031ax (aside: their data, including the event timing text files named above, is in DMCC13benchmark). The 3dDeconvolve command has the part describing the knots and HDR estimation; I included the 3dREMLfit call for completeness, because the plotted estimates are from the Coef sub-bricks of the STATS image (produced by -Rbuck).

Since this is a control GLM in which all trials are given the same label there are only three stimulus time series: BLOCKS, blockONandOFF, and TRIALS (this is a mixed design; see introduction). The TRIALS part is what generates the estimated HDR curves plotted above, as defined by TENTzero(0,21.6,10).

We chose TENTzero instead of TENT for the HDR estimation because we do not expect anticipatory activity (the trial responses should start at zero) in this task. (see afni message board, e.g., here and here) To include the full response we decided to model the trial duration plus at least 14 seconds (not "exactly" 14 seconds because we want the durations to be a multiple of the TENT duration). My understanding is that it's not a problem if more time than needed is included in the duration; if too long the last few knots should just approximate zero. I doubt you'd often want to use a duration much shorter than that of the canonical HRF (there's probably some case when that would be useful, but for the DMCC we want to model the entire response).

From the AFNI 3dDeconvolve help:

'TENT(b,c,n)': n parameter tent function expansion from times b..c after stimulus time [piecewise linear] [n must be at least 2; time step is (c-b)/(n-1)]

You can also use 'TENTzero' and 'CSPLINzero',which means to eliminate the first and last basis functions from each set. The effect of these omissions is to force the deconvolved HRF to be zero at t=b and t=c (to start and and end at zero response). With these 'zero' response models, there are n-2 parameters (thus for 'TENTzero', n must be at least 3).

The first TENTzero parameter is straightforward: b=0 to start at the event onset.

For the trial duration (c), we need to do some calculations. Here, the example is the DMCC Axcpt task, which has a trial duration of 5.9 seconds, so the modeled duration should be at least 14 + 5.9 = 19.9 seconds. That's not the middle value in the TENTzero command, though.

For these first analyses we decided to have the TENTs span two TRs (1.2 * 2 = 2.4 seconds), in the (mostly) mistaken hope it would improve our signal/noise, and also to make the file sizes and GLM estimation speeds more manageable (which it does). Thus, c=21.6, the shortest multiple of our desired TENT duration greater than the modeled duration (19.9/2.4 = 8.29, rounded up to 9; 9*2.4 = 21.6).

Figuring out n requires a bit of mind bending but less calculation; I think the slide below (#5 in afni06_decon.pdf) helps: in tent function deconvolution, the n basis functions are divided into n-1 intervals. In the above calculations I rounded 8.29 up to 9; we want to model 9 intervals (of 2.4 s each). Thus, n = 10 basis functions gives us the needed n-1 = 9 intervals.

Recall that since we're using TENTzero, the first and last tent functions are eliminated. Thus, having n=10 means that we will get 10-2=8 beta coefficients ("knots") out of the GLM. These, finally, are the x-axes in the estimated GLM curves above: the knots at which the HDR estimates are made, of which there are 8 for Axcpt.

(Aside: 0-based afni can make some confusion for those of us more accustomed to 1-based systems, especially with TENTzero. The top plots put the value labeled as #0_Coef at 1; a point is added at 0=0 since TENTzero sets the curves to start at zero. I could also have added a zero for the last knot (9, for this Axcpt example), but did not.)

These GLMs seem to be working fine, producing sensible HDR estimates. We've been using these results in many analyses (multiple lab members with papers in preparation!), including the fMRIprep and HCP pipeline comparisons described in previous posts. We became curious, though, if we could set the knot duration to the TR, rather than twice the TR (as done here): would the estimated HDR then follow the trial timing even more precisely? In the next posts(s) I'll describe how we changed the GLMs to have the knot duration match the TR, and a (hopefully interesting) hiccup we had along the way.

UPDATE 4 January 2021: Corrected DMCC13benchmark openneuro links.