This post describes a direct comparison of carrying out the same ROI-based task-classification MVPA using both surface and volume data. It uses the working memory task fMRI data collected in the HCP, which should be some of the best-preprocessed data available now, with the Gordon et al. (2014) communities as ROIs. Since the Gordon analyses were also done on the surface, I expect these masks to be especially suited for surface analyses. The Gordon parcellation is available perfectly aligned to the HCP volume and surface data, so no transformations (resampling, warping, etc.) are needed.

My intent was to design these analyses to be as "apples-to-apples" as possible, allowing direct comparisons between the surface and volume results. The analyses in this post were carried out in two non-overlapping sets of unrelated people (190 in group 1, 160 in group 2) from the 500-subjects HCP data release. I used ten-fold cross-validation in each group (leaving out 19 people per fold in group 1 and 16 in group 2) and determined the cross-validation folds ahead of time, so the same people made up the training and testing sets for both the volume and surface analyses and for all four pairwise classifications. The goal was to eliminate as many sources of variation unrelated to surface vs. volume as possible. All classifications used a linear SVM (C=1) on the HCP-provided cope images ("parameter estimate images", in my usual parlance), one per person per class (here, 0BK_FACE, 0BK_PLACE, 2BK_FACE, 2BK_PLACE).
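Fixing the folds once and reusing them is what makes the surface and volume runs comparable. Here is a minimal sketch of that idea in Python (the analyses themselves were done in R); `make_folds`, `train_test_splits`, and the seed value are hypothetical names and choices for illustration, and subject IDs are stand-ins for the real HCP IDs.

```python
import random

def make_folds(subject_ids, n_folds=10, seed=1):
    """Assign each subject to exactly one test fold, reproducibly.

    The folds are computed once and then reused for every analysis
    (surface and volume, all pairwise classifications), so accuracy
    differences can't come from different train/test splits.
    The seed is arbitrary; it only needs to stay fixed.
    """
    ids = sorted(subject_ids)            # start from a fixed order
    random.Random(seed).shuffle(ids)     # private RNG: same shuffle every run
    # Round-robin assignment keeps fold sizes within one of each other.
    return [sorted(ids[k::n_folds]) for k in range(n_folds)]

def train_test_splits(folds):
    """Yield (training subjects, testing subjects) for each fold."""
    for k, test in enumerate(folds):
        train = [s for j, fold in enumerate(folds) if j != k for s in fold]
        yield train, test
```

With one cope per person per class, each fold of a pairwise classification in Group 1 tests on 2 × 19 = 38 images and trains on 2 × 171 = 342; in Group 2 it is 2 × 16 = 32 and 2 × 144 = 288. Splitting by person rather than by image keeps both of a person's images on the same side of the split.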

The analyses consisted of four pairwise classifications of working memory task conditions, run in each group of subjects and for each type of image (surface or volume). Briefly, the working memory task was a blocked version of the n-back, with blocks of either 0-back or 2-back, performed with different categories of pictures. I can thus classify either the picture type used in the block (here, face or place) or the n-back level (0 or 2), and make sensible predictions (since these are well-understood tasks): for example, the Visual community should classify face vs. place much better than 0-back vs. 2-back.
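The four pairs aren't listed explicitly above; my assumption is that they are the four (out of the six possible pairs of classes) that change only one factor, so each classification isolates either picture type or n-back level. A small sketch that enumerates them; `single_factor_pairs` is a hypothetical helper, not part of the original analysis code.

```python
from itertools import combinations

# The four HCP working-memory cope labels used in this post.
CLASSES = ["0BK_FACE", "0BK_PLACE", "2BK_FACE", "2BK_PLACE"]

def parse(label):
    """Split a label like '2BK_FACE' into (n-back level, picture type)."""
    load, picture = label.split("_")
    return load, picture

def single_factor_pairs(labels):
    """Return the class pairs that differ in exactly one factor.

    Assumption: these are the pairwise classifications meant in the text.
    Pairs that differ in both factors (e.g. 0BK_FACE vs. 2BK_PLACE) are skipped.
    """
    pairs = []
    for a, b in combinations(labels, 2):
        (load_a, pic_a), (load_b, pic_b) = parse(a), parse(b)
        if load_a == load_b and pic_a != pic_b:
            pairs.append((a, b, "face vs. place"))
        elif pic_a == pic_b and load_a != load_b:
            pairs.append((a, b, "0-back vs. 2-back"))
    return pairs
```

`single_factor_pairs(CLASSES)` returns two face vs. place pairs (one at each n-back level) and two 0-back vs. 2-back pairs (one per picture type).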

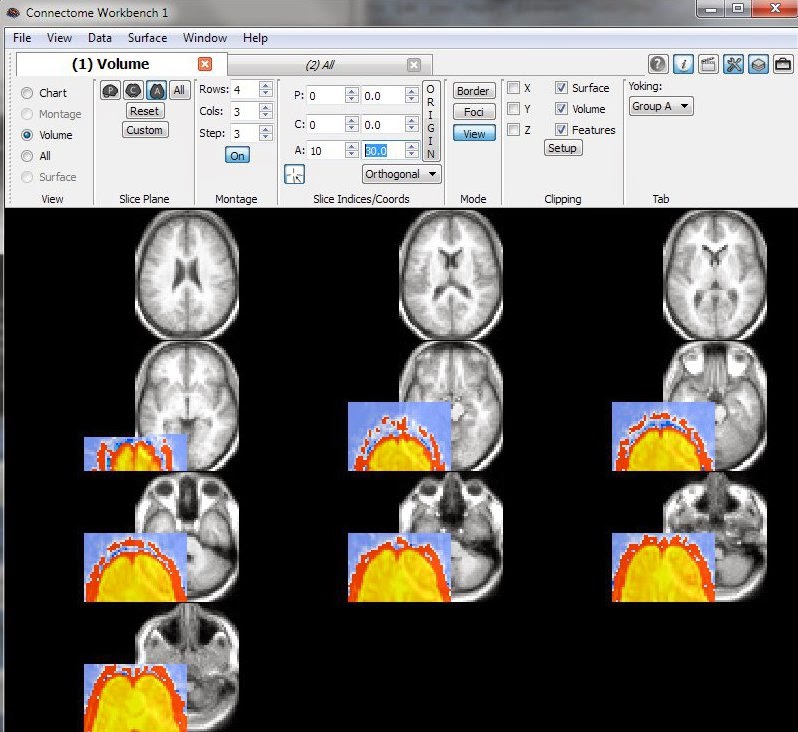

These busy graphs show the results. The first graph has the results for the Group 1 subjects and the second for the Group 2 subjects (i.e., the replication: the same analyses in a second group of people). Accuracies from the volume data are plotted as solid lines with dots, and accuracies from the surface data as dashed lines with x's. The abbreviations along the x-axis are the 13 communities in the Gordon2014 parcellation. There are two points for each community: left hemisphere on the left side of the line, right hemisphere on the right. The area below 0.6 accuracy is shaded because accuracies in this range are unlikely to be meaningful (i.e., I interpret them as chance).

The classification results are sensible: the Visual community classifies face vs. place extremely well, SMmouth doesn't classify anything, and FrontoParietal classifies 0-back vs. 2-back. There's some variation in the details between the two replications (e.g., the right-hemisphere None community classifies better than the left in Group 1, but about the same in Group 2), but the basic pattern of which communities do which classifications is the same in both.

For the surface and volume comparisons, my main impression is of similarity: the accuracies produced from the surfaces and volumes tend to be very close for each community and classification (in the graphs, the dashed and solid lines of each color follow each other). The differences between the surface and volume versions look smaller than the differences between Group 1 and Group 2 (the replications), so I conclude that the surface and volume versions classified about equally well: basically equivalent results either way.
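The comparison above is made by eye from the graphs. One way to put a number on it, sketched here as an assumption rather than as part of the original analysis, is to average the absolute accuracy differences for surface vs. volume (within each group) and for Group 1 vs. Group 2 (within each data type). The dictionary layout and function names are hypothetical.

```python
def mean_abs_diff(a, b):
    """Mean absolute difference between two equal-length accuracy lists."""
    if len(a) != len(b):
        raise ValueError("accuracy lists must be the same length")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def summarize_differences(acc):
    """Compare surface-vs-volume differences with between-group differences.

    acc maps (group, space) -> list of accuracies, e.g. (1, "surface").
    Every list must use the same order of community, hemisphere, and
    classification (13 communities x 2 hemispheres x 4 classifications).
    """
    within_group = [mean_abs_diff(acc[(g, "volume")], acc[(g, "surface")])
                    for g in (1, 2)]
    within_space = [mean_abs_diff(acc[(1, s)], acc[(2, s)])
                    for s in ("volume", "surface")]
    return {
        "surface_vs_volume": sum(within_group) / len(within_group),
        "group1_vs_group2": sum(within_space) / len(within_space),
    }
```

If the impression from the graphs holds, `surface_vs_volume` should come out smaller than `group1_vs_group2`.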

Should this be surprising? Perhaps not: I used the same communities in each analysis, so it's reassuring that they reported basically the same information in the surface and volume versions. The volumetric Gordon community masks closely follow the grey matter (as do the surface reconstructions, of course), so we'd hope they capture the same brain areas. The results would presumably vary more if "lumpier" volumetric ROIs were projected onto the surface.

The number of voxels (volume) in each community is larger than the number of vertices (surface), particularly for larger communities, as shown here (grey line is x=y). This matters for classification accuracy with linear SVMs (like those used here), since they can be more likely to detect information when there are more weakly informative features: more voxels could give the volume-based analyses an advantage, if the extra voxels were (even weakly) informative.
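Counting features per community needs only the label arrays. A minimal sketch, assuming the volumetric labels have already been flattened to one community label per voxel (for example from a NIfTI mask via nibabel's `get_fdata().ravel()`) and the surface labels are one per vertex, with 0 as background; the function names are hypothetical.

```python
from collections import Counter

def features_per_community(labels, background=0):
    """Count how many features (voxels or vertices) carry each community label."""
    return Counter(label for label in labels if label != background)

def compare_counts(volume_labels, surface_labels, background=0):
    """Return {community: (n_voxels, n_vertices)} for every labeled community.

    A community missing from one space gets a count of 0 there
    (Counter returns 0 for absent keys).
    """
    vol = features_per_community(volume_labels, background)
    surf = features_per_community(surface_labels, background)
    return {c: (vol[c], surf[c]) for c in sorted(set(vol) | set(surf))}
```

Plotting the two counts against each other, with an x=y reference line, gives the kind of comparison described above.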

We might guess that the surface version would do better than the volume, if the volume included uninformative voxels that weren't assigned a surface vertex, but that doesn't seem to have happened here (perhaps because the volume masks follow the grey matter so tightly), at least not enough to reduce the accuracy. We might also guess that the surface version would be worse than the volume, for example if preprocessing caused some activity to appear in adjacent non-grey-matter areas (which then wouldn't be included in the surface version). The volumetric BOLD signal is blurred in space during preprocessing (e.g., by motion correction and spatial normalization), because preprocessing is usually done volumetrically before the conversion to surfaces; this is the case for the HCP as well. But in this case, the surface and volume versions came out about the same.
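To make the blurring point concrete, here is a toy one-dimensional illustration, not the HCP pipeline: resampling with linear interpolation at a half-voxel shift, then shifting back, spreads signal from "grey matter" voxels into their neighbors. Real pipelines resample in 3-D and may use other interpolation methods, so the amount of spread will differ; `shift_linear` is a hypothetical helper.

```python
import math

def shift_linear(signal, shift):
    """Resample a 1-D signal at positions i + shift using linear interpolation.

    A 1-D stand-in for resampling a volume during motion correction or
    normalization. Samples that fall outside the signal are treated as 0.
    """
    n = len(signal)
    out = []
    for i in range(n):
        x = i + shift
        lo = math.floor(x)
        frac = x - lo
        a = signal[lo] if 0 <= lo < n else 0.0
        b = signal[lo + 1] if 0 <= lo + 1 < n else 0.0
        out.append((1 - frac) * a + frac * b)
    return out

# Voxels 2-4 are "grey matter"; the rest are outside the mask.
gm = [0.0, 0.0, 1.0, 1.0, 1.0, 0.0, 0.0]
once = shift_linear(gm, 0.5)     # [0.0, 0.5, 1.0, 1.0, 0.5, 0.0, 0.0]
back = shift_linear(once, -0.5)  # [0.0, 0.25, 0.75, 1.0, 0.75, 0.25, 0.0]
```

After the round trip, voxels 1 and 5, outside the original mask, carry signal, while the mask's edge voxels have lost some. A grey-matter-only surface projection would miss the part that leaked out.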

So, can we answer the question I posed at the beginning of this post? How should we do a "standard" ROI-based MVPA: on the surface or in the volume? One set of comparisons can't answer the question definitively, of course, and the HCP data is unusual in many respects (e.g., multiband acquisition, small voxels, specialized preprocessing pipelines). Doing the analyses on the surface gave no accuracy advantage here, nor much of a practical disadvantage, since the HCP provides surface versions of the copes. But I'd be hesitant to recommend doing MVPA with fairly large ROIs (like the Gordon communities) on the surface in a new study, particularly if you had to generate the surface files yourself: that's a lot of extra work (making the surface versions) for what might be very little benefit.

Many more comparisons are needed to provide general guidance on when analyses should be done on the surface or in the volume. I'm particularly interested in trying more comparisons with dilated ROIs: does accuracy improve if voxels in adjacent non-grey matter are included in the mask? If so, we may be better off analyzing the volume. If you run or run across more comparisons, please let me know, and I'll add them here.
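A dilated-ROI comparison would start by growing each volumetric mask. Below is a minimal sketch of one-voxel dilation with 6-connectivity, assuming the mask is stored as a set of voxel coordinates; the optional `exclude` set (for example, voxels already assigned to other communities) is a design choice of this sketch, not something specified above, and `dilate` is a hypothetical name.

```python
def dilate(mask, shape, exclude=frozenset()):
    """Grow a 3-D binary mask by one voxel along each axis (6-connectivity).

    mask: set of (i, j, k) voxel coordinates inside the ROI.
    shape: (ni, nj, nk) image dimensions, used to stay inside the volume.
    exclude: voxels never to add, e.g. those belonging to other ROIs.
    """
    offsets = [(1, 0, 0), (-1, 0, 0), (0, 1, 0),
               (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    grown = set(mask)
    for i, j, k in mask:
        for di, dj, dk in offsets:
            v = (i + di, j + dj, k + dk)
            inside = all(0 <= v[d] < shape[d] for d in range(3))
            if inside and v not in exclude:
                grown.add(v)
    return grown
```

Calling it repeatedly grows the mask further. For array-based masks, `scipy.ndimage.binary_dilation` does the same job; its default structuring element also uses connectivity one (6 neighbors in 3-D).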

The MVPA here was done in R, similarly to the ROI-based demo. I followed the steps here to read the functional data out of the surface (cifti) files, using Guillaume Flandin's MATLAB library to read the extracted gifti files and get the values for the vertices corresponding to each Gordon parcel. Hopefully this post is detailed enough to allow running similar comparisons; I can provide details to replicate it exactly if needed.
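The last step, getting the vertex values for each parcel, amounts to grouping a per-vertex data array by a per-vertex label array. A minimal Python sketch of that step, offered as an alternative to the MATLAB route used here: it assumes both arrays are already in the same vertex order (e.g., both full-mesh arrays for the same hemisphere), which should be checked, and `values_by_parcel` is a hypothetical helper. In Python, GIFTI files can be read with nibabel, e.g. `nibabel.load(path).darrays[0].data`.

```python
from collections import defaultdict

def values_by_parcel(parcel_labels, data, background=0):
    """Group per-vertex values by parcel (or community) label.

    parcel_labels[v] is the label for vertex v and data[v] is the cope
    value at the same vertex. Assumes both use the same vertex order.
    Vertices labeled `background` are dropped.
    """
    if len(parcel_labels) != len(data):
        raise ValueError("label and data arrays must cover the same vertices")
    out = defaultdict(list)
    for label, value in zip(parcel_labels, data):
        if label != background:
            out[label].append(value)
    return dict(out)
```

Each resulting list is one person's feature vector for one class in that parcel; concatenating the parcels assigned to a community gives the community-level features.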